TOWARD A MECHANISTIC PSYCHOLOGY OF DIALOGUE

Martin J. Pickering & Simon Garrod

1. INTRODUCTION

2. THE NATURE OF DIALOGUE AND THE ALIGNMENT OF REPRESENTATIONS

2.1 Alignment of situation models is central to successful dialogue

2.2 Achieving alignment of situation models

2.3. Achieving alignment at other levels

2.4 Alignment at one level leads to alignment at another

2.5 Recovery from misalignment

3 THE INTERACTIVE ALIGNMENT MODEL OF DIALOGUE PROCESSING

3.1. Interactive alignment versus autonomous transmission

3.2. Channels of alignment

3.3. Parity between comprehension and production

4 COMMON GROUND, MISALIGNMENT AND INTERACTIVE REPAIR

4.1 Common Ground versus implicit common ground

4.2 Limits on common ground inference

4.3 Interactive repair using implicit common ground

4.4 Interactive repair using full common ground

5 ALIGNMENT AND ROUTINIZATION

5.1 Speaking: Not necessarily from intention to articulation.

5.2 The production of routines

5.2.1 Why do routines occur?

5.2.2 Massive priming in language production

5.2.3 Producing words and sentences

5.3 Alignment in comprehension

6 SELF MONITORING

7.DIALOGUE AND LINGUISTIC REPRESENTATION

7.1 Dealing with linked utterances

7.2 Architecture of the language system

8 DIALOGUE AND MONOLOGUE

8.1 Degree of coupling defines a dialogic continuum

9 IMPLICATIONS

10 SUMMARY AND CONCLUSION

Martin J. Pickering & Simon Garrod

ABSTRACT: 214 words

MAIN TEXT: 18466

REFERENCES: 3482

ENTIRE TEXT: 22539

TOWARD A MECHANISTIC PSYCHOLOGY OF DIALOGUE

Martin J. Pickering

University of Edinburgh

Department of Psychology

7 George Square

Edinburgh EH8 9JZ

United Kingdom

Email: Martin.Pickering@ed.ac.uk

http://www.psy.ed.ac.uk/Staff/academics.html#PickeringMartin

Simon Garrod

University of Glasgow

Department of Psychology

58 Hillhead Street

Glasgow G12 8QT

United Kingdom

Email: simon@psy.gla.ac.uk

http://staff.psy.gla.ac.uk/~simon/

Short Abstract

Traditional mechanistic accounts of language processing derive almost

entirely from the study of monologue. By contrast we propose a mechanistic

account of dialogue, the interactive alignment account. It assumes

that, in dialogue, the linguistic representations employed by the interlocutors

become aligned at many levels, as a result of a largely automatic process.

The process greatly simplifies production and comprehension in dialogue.

It makes use of a simple interactive inference mechanism, enables the development

of local dialogue routines that greatly simplify language processing, and

explains the origins of self-monitoring in production.

Long Abstract

Traditional mechanistic accounts of language processing derive almost

entirely from the study of monologue. Yet, the most natural and basic form

of language use is dialogue. As a result, these accounts may only offer

limited theories of the mechanisms that underlie language processing in

general. We propose a mechanistic account of dialogue, the interactive

alignment account, and use it to derive a number of predictions about

basic language processes. The account assumes that, in dialogue, the linguistic

representations employed by the interlocutors become aligned at many levels,

as a result of a largely automatic process. This process greatly simplifies

production and comprehension in dialogue. After considering the evidence

for the interactive alignment model, we concentrate on three aspects of

processing that follow from it. It makes use of a simple interactive inference

mechanism, enables the development of local dialogue routines that greatly

simplify language processing, and explains the origins of self-monitoring

in production. We consider the need for a grammatical framework that is

designed to deal with language in dialogue rather than monologue, and discuss

a range of implications of the account.

Keywords: dialogue, language processing, common ground, dialogue

routines, language production, monitoring.

1. INTRODUCTION

Psycholinguistics aims to describe the psychological processes underlying

language use. The most natural and basic form of language use is dialogue:

Every language user, including young children and illiterate adults, can

hold a conversation, yet reading, writing, preparing speeches and even

listening to speeches are far from universal skills. Therefore a central

goal of psycholinguistics should be to provide an account of the basic

processing mechanisms that are employed during natural dialogue.

Currently, there is no such account. Existing mechanistic accounts are

concerned with the comprehension and production of isolated words or sentences,

or with the processing of texts in situations where no interaction is possible,

such as in reading. In other words, they rely almost entirely on monologue.

Thus, theories of basic mechanisms depend on the study of a derivative

form of language processing. We argue that such theories are limited and

inadequate accounts of the general mechanisms that underlie processing.

In contrast, this paper outlines a mechanistic theory of language processing

that is based on dialogue, but which applies to monologue as a special

case.

Why has traditional psycholinguistics ignored dialogue? There are probably

two main reasons, one practical and one theoretical. The practical reason

is that it is generally assumed to be too hard or impossible to study,

given the degree of experimental control necessary. Studies of language

comprehension are fairly straightforward in the experimental psychology

tradition - words or sentences are stimuli that can be appropriately controlled

in terms of their characteristics (e.g., frequency) and presentation conditions

(e.g., randomized order). Until quite recently it was also assumed that

imposing that level of control in many language production studies was

impossible. Thus, Bock (1996) points to the problem of "exuberant responsing"

- how can the experimenter stop subjects saying whatever they want? However,

it is now regarded as perfectly possible to control presentation so that

people produce the appropriate responses on a high proportion of trials,

even in sentence production (e.g., Bock, 1986a; Levelt & Maassen, 1981).

Contrary to many people’s intuitions, the same is true of dialogue.

For instance, Branigan, Pickering, and Cleland (2000) showed effects of

the priming of syntactic structure during language production in dialogue

that were exactly comparable to the priming shown in isolated sentence

production (Bock, 1986b) or sentence recall (Potter & Lombardi, 1998).

In Branigan et al.’s study, the degree of control of independent and dependent

variables was no different from in Bock’s study, even though the experiment

involved two participants engaged in a dialogue rather than one participant

producing sentences in isolation. Similar control is exercised in studies

by Clark and colleagues (e.g., Brennan & Clark, 1996; Wilkes-Gibbs

& Clark, 1992; also Brennan & Schober, 2001; Horton & Keysar,

1996). Well-controlled studies of language production in dialogue may require

some ingenuity, but such experimental ingenuity has always been a strength

of psychology.

The theoretical reason is that psycholinguistics has derived most of

its predictions from generative linguistics, and generative linguistics

has developed theories of isolated, decontextualized sentences that are

used in texts or speeches - in other words, in monologue. In contrast,

dialogue is inherently interactive and contextualized: Each interlocutor

both speaks and comprehends during the course of the interaction; each

interrupts both others and himself; on occasion two or more speakers collaborate

in producing the same sentence (Coates, 1990). So it is not surprising

that generative linguists commonly view dialogue as being of marginal grammaticality,

contaminated by theoretically uninteresting complexities. Dialogue sits

ill with the competence/performance distinction assumed by most generative

linguistics (Chomsky, 1965), because it is hard to determine whether a

particular utterance is "well-formed" or not (or even whether that notion

is relevant to dialogue). Thus, linguistics has tended to concentrate on

developing generative grammars and related theories for isolated sentences;

and psycholinguistics has tended to develop processing theories that draw

upon the rules and representations assumed by generative linguistics. So

far as most psycholinguists have thought about dialogue, they have tended

to assume that the results of experiments on monologue can be applied to

the understanding of dialogue, and that it is more profitable to study

monologue because it is "cleaner" and less complex than dialogue. Indeed,

they have commonly assumed that dialogue simply involves chunks of monologue

stuck together.

The main advocate of the experimental study of dialogue is Clark. However,

his primary focus is on the nature of the strategies employed by the interlocutors

rather than basic processing mechanisms. Clark (1996) contrasts the "language-as-product"

and "language-as-action" traditions. The language-as-product tradition

is derived from the integration of information-processing psychology with

generative grammar and focuses on mechanistic accounts of how people compute

different levels of representation. This tradition has typically employed

experimental paradigms and decontextualized language; in our terms, monologue.

In contrast, the language-as-action tradition emphasizes that utterances

are interpreted with respect to a particular context and takes into account

the goals and intentions of the participants. This tradition has typically

considered processing in dialogue using apparently natural tasks (e.g.,

Clark, 1992; Fussell & Krauss, 1992). Whereas psycholinguistic accounts

in the language-as-product tradition are admirably well-specified, they

are almost entirely decontextualized and, quite possibly, ecologically

invalid. On the other hand, accounts in the language-as-action tradition

rarely make contact with the basic processes of production or comprehension,

but rather present analyses of psycholinguistic processes purely in terms

of their goals (e.g., the formulation and use of common ground; Clark,

1985; Clark, 1996; Clark & Marshall, 1981).

This dichotomy is a reasonable historical characterization. Almost all

mechanistic theories happen to be theories of the processing of monologue;

and theories of dialogue are almost entirely couched in intentional non-mechanistic

terms. But this need not be. The goals of the language-as-product tradition

are valid and important; but researchers concerned with mechanisms should

investigate the use of contextualized language in dialogue.

In this paper we propose a mechanistic account of dialogue and use it

to derive a number of predictions about basic language processing. The

account assumes that in dialogue, production and comprehension become tightly

coupled in a way that leads to the automatic alignment of linguistic representations

at many levels. We argue that the interactive alignment process greatly

simplifies language processing in dialogue as compared to monologue. It

does so (1) by supporting a straightforward interactive inference mechanism,

(2) by enabling interlocutors to develop and use routine expressions, and,

(3) by supporting a system for monitoring language processing.

The first part of the paper presents the main argument (sections 2-6).

In section 2 we show how successful dialogue depends on alignment of representations

between interlocutors at different linguistic levels. In section 3 we contrast

the interactive alignment model developed in section 2 with the autonomous

transmission account that underpins current mechanistic psycholinguistics.

Section 4 describes a simple interactive repair mechanism that supplements

the interactive alignment process. We argue that this repair mechanism

can re-establish alignment when interlocutors’ representations diverge

without requiring them to model each other’s mental states. Thus interactive

alignment and repair enable interlocutors to get around many of the problems

normally associated with establishing what Stalnaker (1978) called common

ground. The interactive alignment process leads to the use of routine or

semi-fixed expressions. In section 5 we argue that such ‘dialogue routines’

greatly simplify language production and comprehension by short-circuiting

the decision making processes. Finally in section 6 we discuss how interactive

alignment enables interlocutors to monitor dialogue with respect to all

levels at which they can align.

The second part of the paper explores implications of the interactive

alignment account. In section 7 we discuss implications for linguistic

theory. In section 8 we argue for a graded distinction between dialogue

and monologue in terms of different degrees of coupling between speaker

and listener. In section 9 we argue that the interactive alignment account

may have broader implications in terms of current developments in areas

such as social interaction, language acquisition, and imitation more generally.

Finally, in section 10 we enumerate the differences between the interactive

alignment model developed in the paper and the more traditional autonomous

transmission account of language processing.

2. THE NATURE OF DIALOGUE AND THE ALIGNMENT OF REPRESENTATIONS

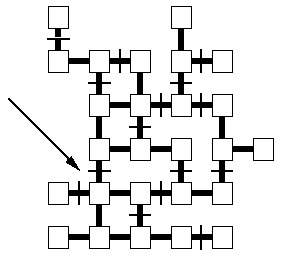

Table 1 shows a transcript of a conversation between two players in

a co-operative maze game (Garrod & Anderson, 1987). In this extract

one player A is trying to describe his position to his partner B

who is viewing the same maze on a computer screen in another room. The

maze is shown in Figure 11.

Table 1. Example dialogue taken from Garrod and Anderson (1987).

1-----B: .... Tell me where you are?

2-----A: Ehm : Oh God (laughs)

3-----B: (laughs)

4-----A: Right : two along from the bottom one up:

5-----B: Two along from the bottom, which side?

6-----A: The left : going from left to right in the second box.

7-----B: You're in the second box.

8-----A: One up :(1 sec.) I take it we've got identical

mazes?

9-----B: Yeah well : right, starting from the left, you're

one along:

10----A: Uh-huh:

11----B: and one up?

12----A: Yeah, and I'm trying to get to ...

[ 28 utterances later ]

41----B: You are starting from the left, you're one along,

one up?(2 sec.)

42----A: Two along : I'm not in the first box, I'm in the second

box:

43----B: You're two along:

44----A: Two up (1 sec.) counting the : if you take :

the first box as being one up :

45----B: (2 sec.) Uh-huh :

46----A: Well : I'm two along, two up: (1.5 sec.)

47----B: Two up ? :

48----A: Yeah (1 sec.) so I can move down one:

49----B: Yeah I see where you are:

* The position being described in the utterances shown in bold is highlighted

with an arrow in Figure 1. Colons mark noticeable pauses of less than 1

second.

Figure 1. Schematic representation of the maze being described in

the conversation shown in Table 1. The arrow points to the position being

described by the utterances marked in bold in the table.

At first glance the language looks disorganized. Many of the utterances

are not grammatical sentences (e.g., only one of the first six contains

a verb). There are occasions when production of a sentence is shared between

speakers, as in (7-8) and (43-44). It often seems that the speakers do

not know how to say what they want to say. For instance, A describes

the same position quite differently in (4), "two along from the bottom

one up," and (46), "two along, two up."

In fact the sequence is quite orderly so long as we assume that dialogue

is a joint activity (Clark, 1996; Clark & Wilkes-Gibbs, 1986). In other

words, it involves cooperation between interlocutors in a way that allows

them to sufficiently understand the meaning of the dialogue as a whole;

and this meaning results from these joint processes. In Lewis’s (1969)

terms, dialogue is a game of cooperation, where both participants "win"

if both understand the dialogue, and neither "wins" if one or both do not

understand.

Conversational analysts argue that dialogue turns are linked across

interlocutors (Sacks, Schegloff & Jefferson, 1974; Schegloff &

Sacks, 1973). A question, such as (1) "Tell me where you are?", calls for

an answer, such as (3) "Two along from the bottom and one up." Even a statement

like (4) "Right, two along from the bottom two up," cannot stand alone.

It requires either an affirmation or some form of query, such as (5) "Two

along from the bottom, which side?" (Linnell, 1998). This means that production

and comprehension processes become coupled. B produces a

question and expects an answer of a particular type; A hears the

question and has to produce an answer of that type. For example, after

saying "Tell me where you are?" in (1), B has to understand "two

along from the bottom one up" in (4) as a reference to A’s position

on the maze; any other interpretation is ruled out. Furthermore, the meaning

of what is being communicated depends on the interlocutors’ agreementorconsensusrather

than on dictionary meanings (Brennan & Clark, 1996) and is subject

to negotiation (Linnell, 1998, p.74). Take for example utterances (4-11)

in the fragment shown above. In utterance (4), A describes his position

as "Two along from the bottom and one up," but the final interpretation

is only established at the end of the first exchange when consensus is

reached on a rather different description by B (9-11) "You're one

along … and one up?" These examples demonstrate that dialogue is far more

coordinated than it might initially appear.

At this point, we should distinguish two notions of coordination that

have become rather confused in the literature. According to one notion

(Clark, 1985), interlocutors are coordinated in a successful dialogue just

as participants in any successful joint activity are coordinated (e.g.,

ballroom dancers, lumberjacks using a two-handed saw). According to the

other notion, coordination occurs when interlocutors share the same representation

at some level (Branigan et al., 2000; Garrod & Anderson, 1987). To

remove this confusion, we refer to the first notion as coordination

and the second as alignment. Specifically, alignment occurs at a

particular level when interlocutors have the same representation at that

level. Dialogue is a coordinated behavior (just like ballroom dancing).

However, the linguistic representations that underlie coordinated dialogue

come to be aligned, as we claim below.

We now argue six points: (1) that alignment of situation models (Zwaan

& Radvansky, 1998) forms the basis of successful dialogue; (2) that

the way that alignment of situation models is achieved is by a primitive

and resource-free priming mechanism; (3) that the same priming mechanism

produces alignment at other levels of representation, such as the lexical

and syntactic; (4) that interconnections between the levels mean that alignment

at one level leads to alignment at other levels; (5) that another primitive

mechanism allows interlocutors to repair misaligned representations interactively;

and (6) that more sophisticated and potentially costly strategies that

depend on modeling the interlocutor’s mental state are only required when

the primitive mechanisms fail to produce alignment. On this basis, we propose

an interactive alignment account of dialogue in the next section..

2.1 Alignment of situation models is central to successful dialogue

A situation model is a multi-dimensional representation of the situation

under discussion (Johnson-Laird, 1983; Sanford & Garrod, 1981; van

Dijk & Kintsch, 1983; Zwaan & Radvansky, 1998). According to Zwaan

and Radvansky, the key dimensions encoded in situation models are space,

time, causality, intentionality, and reference to main individuals under

discussion. They discuss a large body of research which demonstrates that

manipulations of these dimensions affect text comprehension (e.g., people

are faster recognizing that a word had previously been mentioned when that

word referred to something that was spatially, temporally, or causally

related to the current topic). Such models are assumed to capture what

people are "thinking about" while they understand a text, and therefore

are in some sense within working memory (they can be contrasted with linguistic

representations on the one hand and general knowledge on the other).

Most work on situation models has concentrated on comprehension of monologue

(normally, written texts) but they can also be employed in accounts of

dialogue, with interlocutors developing situation models as a result of

their interaction (Garrod & Anderson, 1987). More specifically, we

assume that in successful dialogue, interlocutors develop aligned situation

models. For example, in Garrod and Anderson, players aligned on particular

spatial models of the mazes being described. Some pairs of players came

to refer to locations using expressions like right turn indicator,upside

down T shape, or L on its side. These speakers

represented the maze as an arrangement of patterns or figures. In

contrast, the pair illustrated in the dialogue in Table 1 aligned on a

spatial model in which the maze was represented as a network of paths

linking the points to be described to prominent positions on the maze (e.g.,

the bottom left corner). Pairs often developed quite idiosyncratic spatial

models, but both interlocutors developed the same model (Garrod & Anderson,

1987; Garrod & Doherty, 1994; see also Markman & Makin, 1998).

Alignment of situation models is not necessary in principle for

successful communication. It would be possible to communicate successfully

by representing one’s interlocutor’s situation model, even if that model

were not the same as one’s own. For instance, one player could represent

the maze according to a figure scheme but know that their partner

represented it according to a path scheme, and vice versa. But this

would be wildly inefficient as it would require maintaining two underlying

representations of the situation, one for producing one’s own utterances

and the other for comprehending one’s interlocutor’s utterances. Even though

communication might work in such cases, it is unclear whether we would

claim that the people understood the same thing. More critically, it would

be computationally very costly to have fundamentally different representations.

In contrast, if the interlocutors’ representations are basically the same,

there is no need for listener modeling.

Under some circumstances storing the fact that one’s interlocutors represent

the situation differently than oneself is necessary (e.g., in deception,

or when trying to communicate to one interlocutor information that one

wants to conceal from another). But even in such cases, many aspects of

the representation will be shared (e.g., I might lie about my location,

but would still use a figural representation to do so if that was what

you were using). Additionally, it is clearly tricky to perform such acts

of deception or concealment (Clark & Schaefer, 1987). These involve

sophisticated strategies that do not form part of the basic process of

alignment, and are difficult, because they require the speaker to concurrently

develop two representations.

Of course, interlocutors need not entirely align their situation models.

In any conversation where information is conveyed, the interlocutors must

have somewhat different models, at least before the end of the conversation.

In cases of partial misunderstanding, conceptual models will not be entirely

aligned. In (unresolved) arguments, interlocutors have representations

that cannot be identical. But they must have the same understanding of

what they are discussing in order to disagree about a particular aspect

of it (e.g., Sacks, 1987). For instance, if two people are arguing the

merits of the Conservative versus the Labour parties for the U.K. government,

they must agree about who the names refer to, roughly what the politics

of the two parties are, and so on, so that they can disagree on their evaluations.

In Lewis’s (1969) terms, such interlocutors are playing a game of cooperation

with respect to the situation model (e.g., they succeed insofar as their

words refer to the same entities), even though they may not play such a

game at other "higher" levels (e.g., in relation to the argument itself).

Therefore, we assume that successful dialogue involves approximate alignment

at the level of the situation model at least.

2.2 Achieving alignment of situation models

In theory, interlocutors could achieve alignment of their models through

explicit negotiation, but in practice they normally do not (Brennan &

Clark, 1996; Clark & Wilkes-Gibbs, 1986; Garrod & Anderson, 1987;

Schober, 1993). It is quite unusual for people to suggest a definition

of an expression and obtain an explicit assent from their interlocutor.

Instead, "global" alignment of models seems to result from "local" alignment

at the level of the linguistic representations being used. We propose that

this works via a priming mechanism, whereby encountering an utterance that

activates a particular representation makes it more likely that the person

will subsequently produce an utterance that uses that representation. (On

this conception, priming underpins the alignment mechanism and should not

simply be regarded as a behavioral effect.) In this case, hearing an utterance

that activates a particular aspect of a situation model will make it more

likely that the person will use an utterance consistent with that aspect

of the model. This process is essentially resource-free and automatic.

This was pointed out by Garrod and Anderson (1987) in relation to their

principle of output/input coordination. They noted that in the maze game

task speakers tended to make the same semantic and pragmatic choices that

held for the utterances that they had just encountered. In other words,

their outputs tended to match their inputs at the level of the situation

model. As the interaction proceeded, the two interlocutors therefore came

to align the semantic and pragmatic representations used for generating

output with the representations used for interpreting input. Hence, the

combined system (i.e., the interacting dyad) is completely stable only

if both subsystems (i.e., speaker A’s representation system and

speaker B’s representation system) are aligned. In other words,

the dyad is only in equilibrium when what A says is consistent with

B’s currently active semantic and pragmatic representation of the

dialogue and vice versa (see Garrod & Clark, 1993). Thus, because the

two parties to a dialogue produce aligned language, the underlying linguistic

representations also tend to become aligned. In fact, the output/input

coordination principle applies more generally. Garrod and Anderson also

assumed that it held for lexical representations. We argue that alignment

holds at a range of levels, including the situational model and the lexical

level, but also at other levels, such as the syntactic, as discussed in

section 2.3, and "percolates" between levels, as discussed in section 2.4.

Other work suggests that specific dimensions of situation models can

be aligned. With respect to the spatial dimension, Schober (1993) found

that interlocutors tended to adopt the same reference frame as each other.

When interlocutors face each other, terms like on the left are ambiguous

depending on whether the speaker takes what we can call an egocentric or

an allocentric reference frame. Schober found that if, for instance, A

said on the left meaning on A’s left (i.e., an egocentric

reference frame), then B would subsequently describe similar locations

as on the right (also taking an egocentric frame of reference).

Other evidence for priming of reference frames comes from experiments conducted

outside dialogue (which involve the same priming mechanism on our account).

Thus, Carlson-Radvansky and Jiang (1998) found that people responded faster

on a sentence-picture verification task if the reference frame (in this

case, egocentric vs. intrinsic to the object) used on the current trial

was the same as the reference frame used on the previous trial.2

So far we have assumed that the different components of the situation

model are essentially separate (in accord with Zwaan & Radvansky, 1998),

and that they can be primed individually. But in a particularly interesting

study, Boroditsky (2000) found that the use of a temporal reference frame

can be primed by a spatial reference frame. Thus, if people had just verified

a sentence describing a spatial scenario that assumed a particular frame

of reference (in her terms, ego moving or object moving), they tended to

interpret a temporal expression in terms of an analogous frame of reference.

Her results demonstrate priming of a structural aspect of the situation

model that is presumably shared between the spatial and temporal dimensions

at least. Indeed, work on analogy more generally suggests that it should

be possible to prime abstract characteristics of the situation model (e.g.,

Markman & Gentner, 1993; Gentner & Markman, 1997), and that such

processes should contribute to alignment in dialogue.

There is some evidence for alignment of situation models in comprehension.

Garrod and Anderson (1987) found that players in the maze game would query

descriptions from an interlocutor that did not match their own previous

descriptions (see section 4 below). Recently, Brown-Schmitt, Campana, and

Tanenhaus (in press) have provided direct and striking evidence for alignment

in comprehension. Previous work has shown that eye movements during scene

perception are a strong indication of current attention, and that they

can be used to index the rapid integration of linguistic and contextual

information during comprehension (Chambers, Tanenhaus, Eberhard, Filip,

& Carlson, 2002; Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy,

1995). Brown-Schmitt et al. monitored eye movements during unscripted dialogue,

and found that the entities considered by the listener directly reflected

the entities being considered by the speaker at that point. For instance,

if the speaker used a referring expression that was formally ambiguous

but which the speaker used to refer to a specific entity (and hence regarded

as disambiguated), the listener also looked at that entity. Hence, whatever

factors were constraining the speaker’s situation model were also constraining

the listener’s situation model.

2.3 Achieving alignment at other levels

Dialogue transcripts are full of repeated linguistic elements and structures

indicating alignment at various levels in addition to that of the situation

model (Aijmer, 1996; Schenkein, 1980; Tannen, 1989). Alignment of lexical

processing during dialogue was specifically demonstrated by Garrod and

Anderson (1987) as in the extended example in Table 1 (see also Garrod

& Clark, 1993; Garrod & Doherty, 1994), and by Clark and colleagues

(Brennan & Clark, 1996; Clark & Wilkes-Gibbs, 1986; Wilkes-Gibbs

& Clark, 1992). These latter studies show that interlocutors tend to

develop the same set of referring expressions to refer to particular objects

and that the expressions become shorter and more similar on repetition

with the same interlocutor and are modified if the interlocutor changes.

Levelt and Kelter (1982) found that speakers tended to reply to "What

time do you close?" or "At what time do you close" (in Dutch) with a congruent

answer (e.g., "Five o’clock" or "At five o’clock"). This alignment may

be syntactic (repetition of phrasal categories) or lexical (repetition

of at). Branigan et al. (2000) found clear evidence for syntactic

alignment in dialogue. Participants took it in turns to describe pictures

to each other (and to find the appropriate picture in an array). One speaker

was actually a confederate of the experimenter and produced scripted responses,

such as "the cowboy offering the banana to the robber" or "the cowboy offering

the robber the banana." The syntactic structure of the confederate’s description

strongly influenced the syntactic structure of the experimental subject’s

description. Their work extends "syntactic priming" work to dialogue: Bock

(1986b) showed that speakers tended to repeat syntactic form under circumstances

in which alternative non-syntactic explanations could be excluded (Bock,

1989; Bock & Loebell, 1990; Bock, Loebell, & Morey, 1992; Hartsuiker

& Westenberg, 2000; Pickering & Branigan, 1998; Potter & Lombardi,

1998; cf. Smith & Wheeldon, 2001, and see Pickering & Branigan,

1999, for a review).

Branigan et al.’s (2000) results support the claim that priming activates

representations and not merely procedures that are associated with production

(or comprehension) - in other words, that the explanation for syntactic

priming effects is closely related to the explanation of alignment in general.

This suggests an important "parity" between the representations used in

production and comprehension (see Section 3.2). Interestingly, Branigan

et al. (2000) found very large priming effects compared to the syntactic

priming effects that occur in isolation. There are two reasons why this

might be the case. First, a major reason why priming effects occur is to

facilitate alignment, and therefore that they are likely to be particularly

strong during natural interactions. In Branigan et al. (2000), participants

respond at their own pace, which should be "natural," and hence conducive

to strong priming. Second, we would be expect interlocutors to have their

production systems highly activated even when listening, because they have

to be constantly prepared to become the speaker, whether by taking the

floor or simply making a backchannel contribution.

If syntactic alignment is due, in part, to the interactional nature

of dialogue, then the degree of syntactic alignment should reflect the

nature of the interaction between speaker and listener. As Clark and Schaeffer

(1987; see also Schober & Clark, 1989; Wilkes-Gibbs & Clark, 1992)

have demonstrated, there are basic differences between addressees and other

listeners. So we might expect stronger alignment for addressees than for

other listeners. To test for this, Branigan, Pickering, and Cleland (2002)

had two speakers take it in turns to describe cards to a third person,

so the two speakers heard but did not speak to each other. Priming occurred

under these conditions, but it was weaker than when two speakers simply

responded to each other. Hence, syntactic alignment is affected by speaker

participation in dialogue. Although, we would claim, the same representations

are activated under these conditions as during dyadic interaction, the

closeness of dyadic interaction means that it leads to stronger priming.

For instance, we assume that the production system is active (and hence

is ready to produce an interruption) when the addressee is listening to

the speaker. By contrast, Branigan et al.'s (2002) side participant is

not in a position to make a full contribution, and hence does not need

to activate his production system to the same extent.

Alignment also occurs at the level of articulation. It has long been

known that as speakers repeat expressions, articulation becomes increasingly

reduced (i.e., the expressions are shortened and become more difficult

to recognize when heard in isolation; Fowler & Housum, 1987). However,

Bard et al. (2000) found that reduction was just as extreme when the repetition

was by a different speaker in the dialogue as it was when the repetition

was by the original speaker. In other words, whatever is happening to the

speaker’s articulatory representations is also happening to their interlocutor’s.

There is also evidence that interlocutors align accent and speech rate

(Giles, Coupland, & Coupland, 1992; Giles & Powesland, 1975).

Finally, there is some evidence for alignment in comprehension. Levelt

and Kelter (1982, Experiment 6) found that people judged question-answer

pairs involving repeated form as more natural than pairs that did not;

and that the ratings of naturalness were highest for the cases where there

was the strongest tendency to repeat form. This suggests that speakers

prefer their interlocutors to respond with an aligned form.

2.4 Alignment at one level leads to alignment at another

So far, we have concluded that successful dialogue leads to the development

of both aligned situation models and aligned representations at all other

linguistic levels. There are good reasons to believe that this is not coincidental,

but rather that aligned representations at one level lead to aligned representations

at other levels.

Consider the following two examples of influences between levels. First,

Garrod and Anderson (1987) found that once a word had been introduced with

a particular interpretation it was not normally used with any other interpretation

in a particular stretch of maze-game dialogue. For instance, the word row

could refer either to an implicitly ordered set of horizontal levels of

boxes in the maze (e.g., with descriptions containing an ordinal like "I’m

on the fourth row") or to an unordered set of levels (e.g., with descriptions

that do not contain ordinals like "I’m on the bottom row").3

Speakers who had adopted one of these local interpretations of rowand

needed to refer to the other would introduce a new term, such as line

or level. Thus, they would talk of the fourth row and the

bottom line, but not the fourth row and the bottom row

(see Garrod & Anderson, 1987 p. 202). In other words, aligned use of

a word seemed to go with a specific aligned interpretation of that word.

Restricting usage in this way allows dialogue participants to assume quite

specific unambiguous interpretations for expressions. Furthermore, if a

new expression is introduced they can assume that it would have a different

interpretation from a previous expression, even if the two expressions

are "dictionary synonyms." This process leads to the development of a lexicon

of expressions relevant to the dialogue (see section 5). What interlocutors

are doing is acquiring new senses for words or expressions. To do this,

they use the principle of contrast just like children acquiring language

(e.g., E.V. Clark, 1993).

Second, it has been shown repeatedly that priming at one level can lead

to more priming at other levels. Specifically, syntactic alignment (or

"syntactic priming") is enhanced when more lexical items are shared. In

Branigan et al.’s (2000) study, the stooge produced a description using

a particular verb (e.g., the nun giving the book to the clown).

Some experimental subjects then produced a description using the same verb;

whereas other subjects produced a description using a different verb. Syntactic

alignment was considerably enhanced if the verb was repeated (as also happens

in monologue; Pickering & Branigan, 1998). Thus, interlocutors do not

align representations at different linguistic levels independently. Likewise,

Cleland and Pickering (2002) found people tended to produce noun phrases

like the sheep that’s red as opposed to the red sheep more

often after hearing the goat that’s red than after the book that’s

red. This demonstrates that semantic relations between lexical items

enhance syntactic priming.

These effects can be modeled in terms of a lexical representation outlined

in Pickering and Branigan (1998). A node representing a word (i.e., its

lemma; Levelt, Roelofs, & Meyer, 1999; cf. Kempen & Huijbers, 1983)

is connected to nodes that specify its syntactic properties. So, the node

for give is connected to a node specifying that it can be used with

a noun phrase and a prepositional phrase. Processing giving the book

to the clown activates both of these nodes and therefore makes them

both more likely to be employed subsequently. However, it also strengthens

the link between these nodes, on the principle that coactivation strengthens

association. Thus, the tendency to align at one level, such as the syntactic,

is enhanced by alignment at another level, such as the lexical. Cleland

and Pickering’s (2002) finding demonstrates that exact repetition at one

level is not necessary: the closer the relationship at one level (e.g.,

the semantic), the stronger the tendency to align at the other (e.g., the

syntactic). Note that we can make use of this tendency to determine which

specific levels are linked.

In comprehension, there is evidence for parallelism at one level occurring

more when there is parallelism at another level. Thus, pronouns tend to

be interpreted as coreferential with an antecedent in the same grammatical

role (e.g., "William hit Oliver and Rod slapped him" is interpreted as

Rod slapping Oliver) (Sheldon, 1974; Smyth, 1994). Likewise, the likelihood

of a gapping interpretation of an ambiguous sentence is greater if the

relevant arguments are parallel (e.g., "Bill took chips to the party and

Susan to the game" is often given an interpretation where Susan took chips

to the game) (Carlson, 2001). Finally, Gangé and Shoben (2002; cf.

Gangé, 2001) found evidence that interpreting a compound as having

a particular semantic relation (e.g., type of doctor in adolescent doctor)

was facilitated by prior interpretation of a compound containing either

the same noun or adjective which used the same relation (e.g., adolescent

magazine or animal doctor). These effects have only been demonstrated

in reading, but we would also expect them to occur in dialogue.

The mechanism of alignment and in particular the percolation of alignment

between levels has a very important consequence that we discuss in section

5. Interlocutors will tend to align expressions at many different levels

at the same time.4

When all levels are aligned, interlocutors will repeat each others’ expressions

in the same way (e.g., with the same intonation). Hence, dialogue should

be highly repetitive, and should make extensive use of fixed expressions.

Importantly, fixed expressions should be established during the dialogue,

so that they become dialogue routines.

2.5 Recovery from misalignment

Of course, these primitive processes of alignment are not foolproof.

For example, interlocutors might align at a "superficial" level but not

at the level of the situation model (e.g., if they both refer to John

but do not realize that they are referring to different Johns). In such

cases, interlocutors need to be able to appeal to other mechanisms in order

to establish or reestablish alignment. The account is not complete until

we outline such mechanisms, which we do in section 4 below. For now, we

simply assume that such mechanisms exist and are needed to supplement the

basic process of alignment.

3. THE INTERACTIVE ALIGNMENT MODEL OF DIALOGUE PROCESSING

The interactive alignment model assumes that successful dialogue involves

the development of aligned representations by the interlocutors. This occurs

by priming mechanisms at each level of linguistic representation, by percolation

between the levels so that alignment at one level enhances alignment at

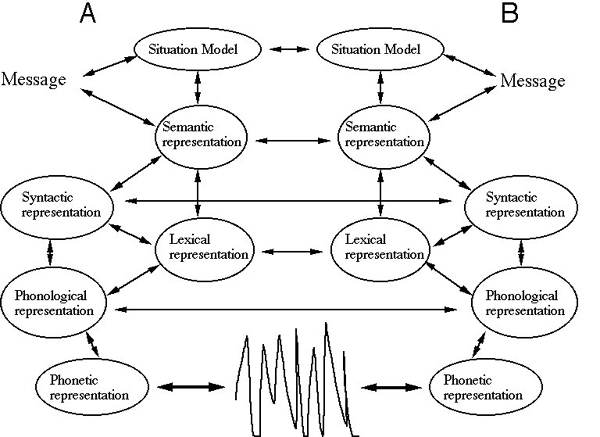

other levels, and by repair mechanisms when alignment goes awry. Figure

2 illustrates the process of alignment in fairly abstract terms. It shows

the levels of linguistic representation computed by two interlocutors and

ways in which those representations are linked. Critically, Figure 2 includes

links between the interlocutors at multiple levels.

Figure 2. A and B represent two interlocutors

in a dialogue in this schematic representation of the stages of comprehension

and production processes according to the interactive alignment model.

The details of the various levels of representation and interactions between

levels are chosen to illustrate the overall architecture of the system

rather than to reflect commitment to a specific model.

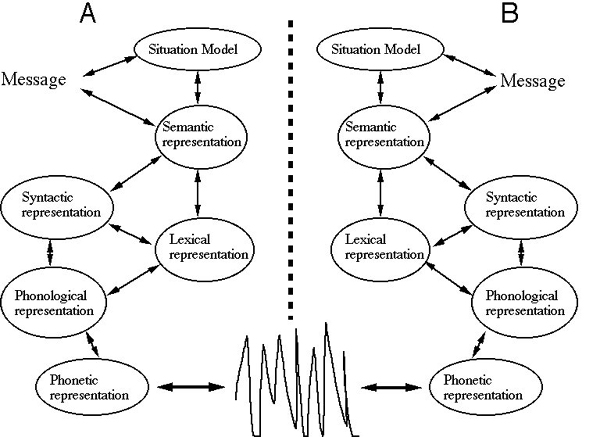

In this section, we elucidate the figure in three ways. First, we contrast

it with a more traditional "autonomous transmission" account, as represented

in Figure 3, where multiple links between interlocutors do not exist. Second,

we interpret these links as corresponding to channels whereby priming occurs.

Finally, we argue that the bi-directional nature of the links means that

there must be parity between production and comprehension processes.

Figure 3. A and B represent two interlocutors

in a dialogue in this schematic representation of the stages of comprehension

and production processes according to the autonomous transmission account.

The details of the various levels of representation and interactions between

levels are chosen to illustrate the overall architecture of the system

rather than to reflect commitment to a specific model.

3.1. Interactive alignment versus autonomous transmission

In the autonomous transmission account, the transfer of information

between producers and comprehenders takes place via decoupled production

and comprehension processes that are "isolated" from each other (see Fig.

3). The speaker (or writer) formulates an utterance on the basis of his

representation of the situation. Crudely, a non-linguistic idea or "message"

is converted into a series of linguistic representations, with earlier

ones being syntactic, and later ones being phonological. The final linguistic

representation is converted into an articulatory program, which generates

the actual sound (or hand movements) (e.g., Levelt, 1989). Each intermediate

representation serves as a "way station" on the road to production — its

significance is internal to the production process. Hence there is no reason

for the listener to be affected by these intermediate representations.

In turn, the listener (or reader) decodes the sound (or movements) by

converting the sound into successive levels of linguistic representation

until the message is recovered (if the communication is successful). He

then infers what the speaker (or writer) intended on the basis of

his autonomous representation of the situation. So, from a processing point

of view, speakers and listeners act in isolation . The only link between

the two is in the information conveyed by the utterances themselves (Cherry,

1956). Each act of transmission is treated as a discrete stage, with a

particular unit being encoded into sound by the speaker, being transmitted

as sound, and then being decoded by the listener. Levels of linguistic

representation are constructed during encoding and decoding, but there

is no particular association between the levels of representation used

by the speaker and listener. Indeed, there is even no reason to assume

that the levels will be the same, nor that assumptions about the levels

involved in comprehension should constrain those assumed in production,

or vice versa. Hence, Figure 3 could just as well involve different levels

of representation for speaker and listener.

The autonomous transmission model is not appropriate for dialogue because,

in dialogue, production and comprehension processes are coupled (Garrod,

1999). In formulating an utterance the speaker is guided by what has just

been said to him and in comprehending the utterance the listener is constrained

by what he has just said, as in the example dialogue in Table 1. The interlocutors

build up utterances as a joint activity (Clark, 1996), with interlocutors

often interleaving production and comprehension tightly. They also align

at many different levels of representation, as discussed in Section 2.

Thus, in dialogue each level of representation is causally implicated in

the process of communication and these intermediate representations are

retained implicitly. Because alignment at one level leads to alignment

at others, the interlocutors come to align their situation models and hence

are able to understand each other. This follows from the interactive alignment

model described in Figure 2, but is not reflected in the autonomous transmission

account in Figure 3.

3.2. Channels of alignment

The horizontal links in Figure 2 correspond to channels by which alignment

takes place. The communication mechanism used by these channels is priming.

Thus, we assume that lexical priming leads to the alignment at the lexical

level, syntactic priming leads to alignment at the syntactic level, and

so on. Although fully specified theories of how such priming operates are

not available for all levels, sections 2.2 and 2.3 described some of the

evidence to support priming at these levels, and detailed mechanisms of

priming are proposed in many of the papers referred to there. As an example,

Branigan et al. (2000) provided an account of syntactic alignment in dialogue

that involved priming of syntactic information at the lemma stratum. Because

channels of alignment are bi-directional, the model predicts that if evidence

is found for alignment in one direction (e.g., from comprehension to production)

it should also be found for alignment in the other (e.g., from production

to comprehension). Of course, the linguistic information conveyed by the

channels is encoded in sound.

Critically, these channels are direct and automatic (as implied by the

term "priming"). In other words, the activation of a representation in

one interlocutor leads to the activation of the matching representation

in the other interlocutor directly. There is no intervening "decision box"

where the listener makes a decision about how to respond to the "signal."

Although such decisions do of course take place during dialogue (see Section

4 below), they do not form part of the basic interactive alignment process

which is automatic and largely unconscious. We assume that such channels

are similar to the direct and automatic perception-behavior link that has

been proposed to explain the central role of imitation in social interaction

(Bargh & Chartrand, 1999; Dijksterhuis & Bargh, 2001).

Figure 2 therefore indicates how interlocutors can align in dialogue

via the interactive alignment model. It does not of course provide an account

of communication in monologue, but the goal of monologue is not to get

to aligned representations. Instead, the listener attempts to obtain a

specific representation corresponding to the speaker’s message, and the

speaker attempts to produce the appropriate sounds that will allow the

listener to do this. Moreover, in monologue (including writing), the speaker’s

and the listener’s representations can rapidly diverge (or never align

at all). The listener then has to draw inferences on the basis of his knowledge

about the speaker, and the speaker has to infer what the listener has inferred

(or simply assume that the listener has inferred correctly). Of course,

either party could easily be wrong, and these inferences will often be

costly. In monologue, the automatic mechanisms of alignment are not present

(the consequences for written production are demonstrated in Traxler &

Gernsbacher, 1992, 1993). It is only when regular feedback occurs that

the interlocutors can control the alignment process.

The role of priming is very different in dialogue from monologue. In

monologue, it can largely be thought of as an epiphenomenal effect, which

is of considerable use to psycholinguists as a way of investigating representation

and process, but of little importance in itself. However, our analysis

of dialogue demonstrates that priming is the central mechanism in the process

of alignment and mutual understanding. Thus dialogue indicates the important

functional role of priming. In conclusion, we regard priming as underlying

the links between the two sides of Figure 2, and hence the mechanism that

drives interactive alignment.

3.3 Parity between comprehension and production

On the autonomous transmission account, the processes employed in production

and comprehension need not draw upon the same representations (see Fig.

3). By contrast, the interactive alignment model assumes that the processor

draws upon the same representations (see Fig. 2). This parity

means that a representation that has just been constructed for the purposes

of comprehension can then be used for production (or vice versa). This

straightforwardly explains, for instance, why we can complete one another’s

utterances (and get the syntax, semantics, and phonology correct; see section

7.1). It also serves as an explanation of why syntactic priming in production

occurs when the speaker has only heard the prime (Branigan et al., 2000;

Potter & Lombardi, 1998), as well as when he has produced the prime

(Bock, 1986b; Pickering & Branigan, 1998).

The notion of parity of representation is controversial but has been

advocated by a wide range of researchers working in very different domains

(Calvert et al., 1997; Liberman & Whalen, 2000; MacKay, 1987; Mattingly

& Liberman, 1988). For example, Goldinger (1998) demonstrated that

speech ‘shadowers’ imitate the perceptual characteristics of a shadowed

word (i.e., their repetition is judged acoustically more similar to the

shadowed word than to another production of the same word by the shadower).

He argued that this vocal imitation in shadowing strongly suggests an underlying

perception-production link at the phonological level.

Parity is also increasingly advocated as a means of explaining perception/action

interactions outside language (Hommel, Müsseler, Aschersleben, &

Prinz, 2001). We return to this issue in section 8. Note that parity only

requires that the representations be the same. The processes leading to

those representations need not be related (e.g., there is no need for the

mapping between representations to be simply reversed in production and

comprehension).

4. COMMON GROUND, MISALIGNMENT, AND INTERACTIVE REPAIR

In current research on dialogue, the key conceptual notion has been

"common ground" which refers to background knowledge shared between the

interlocutors (Clark & Marshall, 1981). Traditionally, most research

on dialogue has assumed that interlocutors communicate successfully when

they share a common ground, and that one of the critical preconditions

for successful communication is the establishment of common ground (Clark

& Wilkes-Gibbs, 1986). Establishment of common ground involves a good

deal of modeling of one’s interlocutor’s mental state. In contrast, our

account assumes that alignment of situation models follows from lower-level

alignment, and is therefore a much more automatic process. We argue that

interlocutors align on what we term an implicit common ground, and only

go beyond this to a (full) common ground when necessary. In particular,

interlocutors draw upon common ground as a means of repairing misalignment

when more straightforward means of repair fail.

4.1 Common ground versus implicit common ground

Alignment between interlocutors has traditionally been thought to arise

from the establishment of common, mutual, or joint knowledge (Lewis, 1969;

McCarthy, 1990; Schiffer, 1972). Perhaps the most influential example of

this approach is Clark and Marshall’s (1981) argument that successful reference

depends on the speaker and the listener inferring mutual knowledge about

the circumstances surrounding the reference. Thus, for a female speaker

to be certain that a male listener understands what is meant by "the movie

at the Roxy," she needs to know what he knows and what he knows that she

knows, and so forth. Likewise, for him to be certain about what she means

by "the movie at the Roxy," he needs to know what she knows and what she

knows that he knows, and so forth. However, there is no foolproof procedure

for establishing mutual knowledge expressed in terms of this iterative

formulation, because it requires formulating recursive models of interlocutors’

beliefs (see Barwise, 1989; Clark, 1996, ch. 4; Halpern & Moses, 1990;

Lewis, 1969). Therefore, Clark and Marshall (1981) suggested that interlocutors

instead infer what Stalnaker (1978) called the common ground. Common ground

reflects what can reasonably be assumed to be known to both interlocutors

on the basis of the evidence at hand. This evidence can be non-linguistic

(e.g., if both know that they come from the same city they can assume a

degree of common knowledge about that city; if both admire the same view

and it is apparent to both that they do so, they can infer a common perspective),

or can be based on the prior conversation.

Even though inferring common ground is computationally more feasible

than inferring the iterative formulation of mutual knowledge, it still

requires the interlocutor to maintain a very complex situation model that

reflects both his own knowledge and the knowledge that he assumes to be

shared with his partner. To do this, he has to keep track of the knowledge

state of the interlocutor in a way that is separate from his own knowledge

state. This is a very stringent requirement for routine communication,

in part because he has to make sure that this model is constantly updated

appropriately (e.g., Halpern & Moses, 1990).

In contrast, the interactive alignment model proposes that the fundamental

mechanism that leads to alignment of situation models is automatic. Specifically

the information that is shared between the interlocutors constitutes what

we call an implicit common ground. When interlocutors are well aligned,

the implicit common ground is extensive. Unlike common ground, implicit

common ground does not derive from interlocutors explicitly modeling each

other’s beliefs. Implicit common ground is therefore built up automatically

and is used in straightforward processes of repair. Interlocutors do of

course make use of (full) common ground on occasion, but it does not form

the basis for alignment.

Implicit common ground is effective because an interlocutor builds up

a situation model that contains (or at least foregrounds) information that

the interlocutor has processed (either by producing that information or

comprehending it). But since the other interlocutor is also present, he

comprehends what the first interlocutor produces and vice versa. This means

that both interlocutors foreground the same information, and therefore

tend to make the same additions to their situation models. Of course each

interlocutor’s situation model will contain some information that he is

aware of but the other interlocutor is not, but as the conversation proceeds

and more information is added, the amount of information that is not shared

will be reduced. Hence the implicit common ground will be extended. Notice

that there is no need to infer the situation model of one’s interlocutor.

This account predicts that speakers only automatically adapt their utterances

when the information can be accessed from their own situation model. However,

because access is from aligned representations which reflect the implicit

common ground these adaptations will normally be helpful incidentally for

the listener. This point was first made by Brown and Dell (1987), who noted

that if speaker and listener have very similar representations of a situation,

then most utterances that appear to be sensitive to the mental state of

the listener may in fact be produced without reference to the listener.

This is because what is easily accessible for the speaker will also be

easily accessible for the listener. In fact, the better aligned speaker

and listener are, the closer such an implicit common ground will be to

the full common ground, and the less effort need be exerted to support

successful communication.

Hence, we argue that interlocutors do not need to monitor and develop

full common ground as a regular, constant part of routine conversation,

as it would be unnecessary and far too costly. Establishment of full common

ground is, we argue, a specialized and non-automatic process that is used

primarily in times of difficulty (when radical misalignment becomes apparent).

We now argue that speakers and listeners do not routinely take common ground

into account during initial processing. We then discuss interactive repair,

and suggest that full common ground is only used when simpler mechanisms

are ineffective.

4.2 Limits on common ground inference

Studies of both production and comprehension in situations where there

is no direct interaction (i.e., situations which do not allow feedback)

indicate that language users do not always take common ground into account

in producing or interpreting references. For example, Horton and Keysar

(1996) found that speakers under time pressure did not produce descriptions

that took advantage of what they knew about the listener’s view of the

relevant scene. In other words, the descriptions were formulated with respect

to the speaker’s current knowledge of the scene rather than with respect

to the speaker and listener’s common ground. Keysar, Barr, Balin, and Paek

(1998) found that in visually searching for a referent for a description

listeners are just as likely to initially look at things that are not part

of the common ground as things that are, and Keysar, Barr, Balin, and Bruner

(2000) found that listeners initially considered objects that they knew

were not visible to their conversational partner. In a similar vein, Brown

and Dell (1987) showed that apparent listener-directed ellipsis was not

modulated by information about the common ground between speaker and listener,

but rather was determined by the accessibility of the information for the

speaker alone (though cf. Lockridge & Brennan, 2002, and Schober &

Brennan, in press, for reservations). Finally, Ferreira and Dell (2000)

found that speakers did not try to construct sentences that would make

comprehension easy (i.e., by preventing syntactic misanalysis on the part

of the listener).

Even in fully interactive dialogue it is difficult to find evidence

for direct listener modeling. For example, it was originally thought that

articulation reduction might reflect the speaker’s sensitivity to the listener’s

current knowledge (Lindblom, 1990). However, Bard et al. (2000) found that

the same level of articulation reduction occurred even after the speaker

encountered a new interlocutor. In other words, degree of reduction seemed

to be based only on whether the reference was given information for the

speaker and not on whether it was part of the common ground. Additionally,

speakers will sometimes use definite descriptions (to mark the referent

as given information; Haviland & Clark, 1974) when the referent is

visible to them, even when they know it is not available to their interlocutor

(Anderson & Boyle, 1994).

Nevertheless, under certain circumstances interlocutors do engage in

strategic inference relating to (full) common ground. As Horton and Keysar

(1996) found, with less time pressure speakers often do take account of

common ground in formulating their utterances. Keysar et al. (1998) argued,

that listeners can take account of common ground in comprehension under

circumstances in which speaker/listener perspectives are radically different

(see also Brennan & Clark, 1996; Schober & Brennan, in press),

though they proposed that this occurs at a later monitoring stage, in a

process that they called perspective adjustment. More recently, Hanna,

Tanenhaus, and Trueswell (2001) found that listeners looked at an object

in a display less if they knew that the speaker did not know of the object’s

existence than otherwise (see Nadig & Sedivy, 2002, for a related study

with 5-6 year old children). These differences emerged during the earliest

stages of comprehension, and therefore suggest that the strongest form

of perspective adjustment cannot be correct. However, their task was repetitive

and involved a small number of items, and listeners were given explicit

information about the discrepancies in knowledge. Under such circumstances,

it is not surprising that listeners develop strategies that may invoke

full common ground. During natural dialogue, we predict that such strategies

will not normally be used.

In conclusion, we have argued that performing inferences about common

ground is an optional strategy that interlocutors employ only when resources

allow. Critically, such strategies need not always be used, and most "simple"

(e.g., dyadic, non-didactic, non-deceptive) conversation works without

them most of the time.

4.3 Interactive repair using implicit common ground

Of course, the automatic process of alignment does not always lead to

appropriately aligned representations. When interlocutors’ representations

are not properly aligned, the implicit common ground is faulty. We argue

that they employ an interactive repair mechanism which helps to maintain

the implicit common ground. The mechanism relies on two processes: (1)

checking whether one can straightforwardly interpret the input in relation

to one’s own representation, and (2) when this fails, reformulating the

utterance in a way that leads to the establishment of implicit common ground.

Importantly, this mechanism is iterative, in that the original speaker

can then pick up on the reformulation and, if alignment has not been established,

reformulate further.

Consider again the example in Table 1. Throughout this section of dialogue

A and B assume subtly different interpretations for two

along. A interprets two along by counting the boxes on

the maze, whereas B is counting the links between the boxes (see

Fig. 1). This misalignment arises because the two speakers represent the

meaning of expressions like two along differently in this context.

In other words, the implicit common ground is faulty.

Therefore, the players engage in interactive repair, first by determining

that they cannot straightforwardly interpret the input, and then by reformulation.

The reformulation can be a simple repetition with rising intonation (as

in 7), a repetition with an additional query (as when B says "two

along from the bottom, which side?" in 5), or a more radical restatement

(as when A reformulates "two along" as "second box" in 6). Such

reformulation is very common in conversation and is described by some linguists

as clarification request (See Ginzburg, 2001). None of these reformulations

requires the speaker to take into account the listener’s situation model.

They simply reflect failures to understand what the speaker is saying in

relation to the listener’s own model.

They serve to throw the problem back to the interlocutor who can then

attempt a further simple reformulation if he still fails to understand

the description. For example, B says "… you’re one along, one up?"

(41), which A reformulates as "Two along …" (42). Probably because

of this reformulation, A then asks the clarification request "You’re

two along." The cycle continues until the misalignment has been resolved

in (44) when A is able to complete B’s utterance without

further challenge (for discussion of such embedded repairs see also Jefferson,

1987). This repair process can be regarded as involving a kind of dialogue

inference, but notice that it is externalized, in the sense that it can

only operate via the interaction between the interlocutors. It contrasts

with the kind of discourse inference that occurs during text comprehension

(or listening to a speech), where the reader has to mentally infer the

writer’s meaning (e.g., via a bridging inference; Haviland & Clark,

1974).

4.4 Interactive repair using full common ground

Interactive repair using implicit common ground is basic because it

only relies on the speaker checking the conversation in relation to his

own knowledge of the situation. Of course there will be occasions when

a more complicated and strategic assessment of common ground may be necessary,

most obviously when the basic mechanism fails. In such cases, the listener

may have to draw inferences about the speaker (e.g., "She has referred

to John; does she mean John Smith or John Brown? She knows both, but thinks

I don’t know Brown, hence she probably means Smith."). Such cases may of

course involve internalized inference, in a way that may have more in common

with text comprehension than with most aspects of everyday conversation.

But interlocutors may also engage in explicit negotiation or discussion

of the situation models. This appears to occur in our example when A

says "I take it we’ve got identical mazes" (8).

Use of full common ground is particularly likely when one speaker is

trying to deceive the other or to conceal information (e.g., Clark &

Schaefer, 1987), or when interlocutors deliberately decide not to align

at some level (e.g., because each interlocutor has a political commitment

to a different referring expression; Jefferson, 1987). Such cases may involve

complex (and probably conscious) reasoning, and there may be great differences

between people’s abilities (e.g., between those with and without an adequate

‘theory of mind’; Baron-Cohen, Tager-Flusberg, & Cohen, 2000). For

example, Garrod and Clark (1993) found that younger children could not

circumvent the automatic alignment process. Seven year old maze game players

failed to introduce new description schemes when they should have done

so, because they could not overcome the pressure to align their description

with the previous one from the interlocutor. By contrast, older children

and adults were twice as likely to introduce a new description scheme when

they had been unable to understand their partner’s previous description.

Whereas the older children could adopt a strategy of non-alignment when

appropriate, the younger children seemed unable to do so. Our claim is

that these strategic processes are overlaid on the basic interactive alignment

mechanism. However, such strategies are clearly costly in terms of

processing resources and may be beyond the abilities of less skilled language

users.

The strategies discussed above relate specifically to alignment (either

avoiding it or achieving it explicitly), but of course many aspects of

dialogue serve far more complicated functions. Thus, a speaker can attempt

to produce a particular emotional reaction in the listener by an utterance,

or persuade the listener to act in a particular way or to think in depth

about an issue (e.g., in expert-novice interactions). Likewise, the speaker

can draw complex inferences about the mental state of the listener and

can try to probe this state by interrogation. Thus, it is important to

stress that we are proposing interactive alignment as the primitive mechanism

underlying dialogue, not a replacement for the more complicated strategies

that conversationalists may employ on occasion.

Nonetheless, we claim that normal conversation does not routinely require

modeling the interlocutor’s mind. Instead, the overlap between interlocutors’

representations is sufficiently great that a specific contribution by the

speaker will either trigger appropriate changes in the listener’s representation,

or will bring about the process of interactive repair. Hence, the listener

will retain an appropriate model of the speaker’s mind, because, in

all essential respects, it is the listener’s representation as well.

Processing monologue is quite different in this respect. Without automatic

alignment and interactive repair the listener can only resort to costly

bridging inferences whenever he fails to understand anything. And, to ensure

success, the speaker will have to design what he says according to what

he knows about the audience (see Clark & Murphy, 1982). In other words,

he will have to model the mind or minds of the audience. Interestingly,

Schober (1993) found that speakers in monologue were more likely to adopt

a listener-oriented reference frame than speakers in dialogue, and that

this was costly. Because adopting the listener’s perspective can be very

complex (e.g., if different members of the audience are likely to know

different amounts), it is not surprising that people’s skill at public

speaking differs enormously, in sharp contrast to everyday conversation.

5. ALIGNMENT AND ROUTINIZATION

The process of alignment means that interlocutors draw upon representations

that have been developed during the dialogue. Thus it is not always necessary

to construct representations that are used in production or comprehension

from scratch. This perspective radically changes our accounts of language

processing in dialogue. One particularly important implication is that

interlocutors develop and use routines (set expressions) during a particular

interaction. Most of this section addresses the implications of this perspective

for language production, where they are perhaps most profound. We then

turn more briefly to language comprehension.

5.1. Speaking: Not necessarily from intention to articulation.

The seminal account of language production is Levelt's (1989) book Speaking,

which has the informative subtitle From intention to articulation.

Chapter by chapter, Levelt describes the stages involved in the process

of language production, starting with the conceptualization of the message,

through the process of formulating the utterance as a series of linguistic

representations (representing grammatical functions, syntactic structure,

phonology, metrical structure, etc.), through to articulation. The core

assumption is that the speaker necessarily goes through all of these stages

in a fixed order. The same assumption is common to more specific models

of word production (e.g., Levelt et al., 1999) and sentence production

(e.g., Bock & Levelt, 1994; Garrett, 1980). Experimental research is

used to back up this assumption. In most experiments concerned with understanding

the mechanisms underlying language production, the speaker is required